Don't test your code.

Test user frustration.

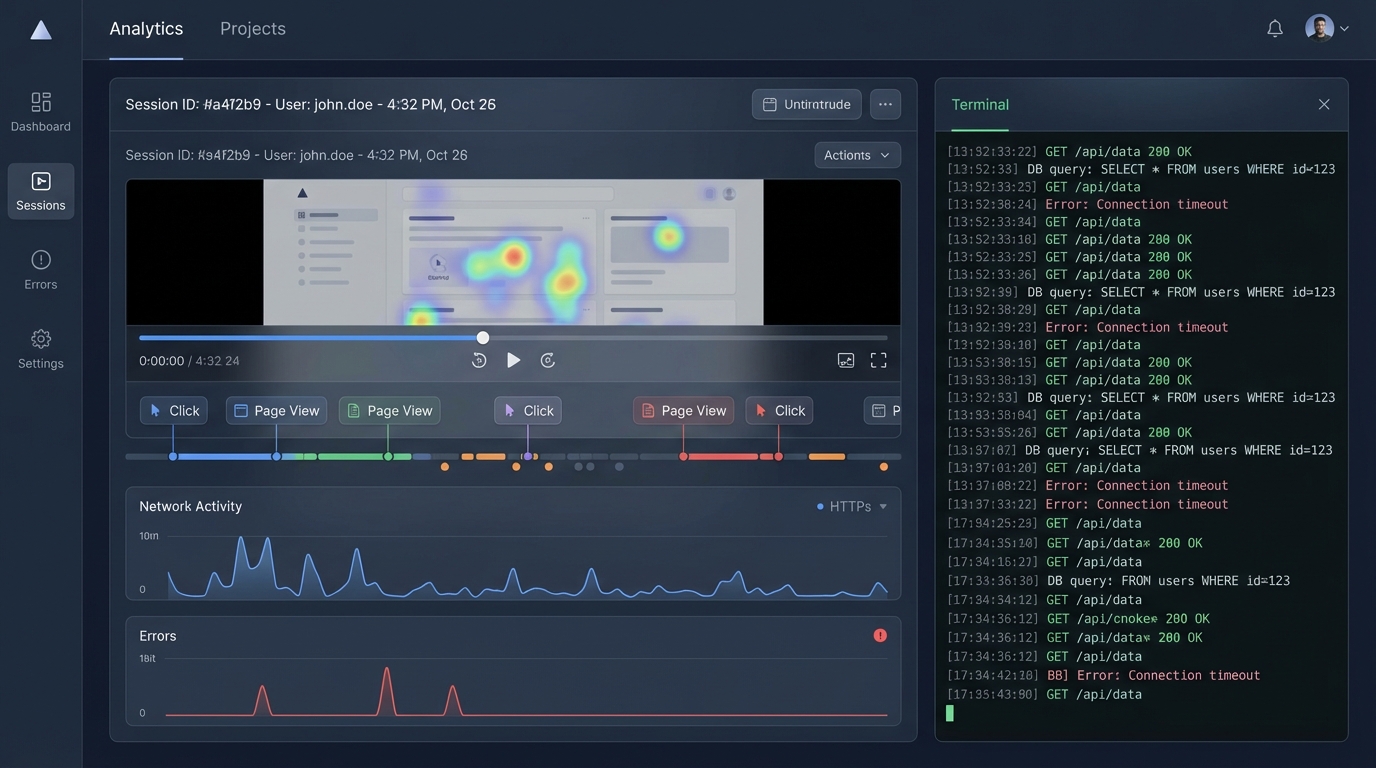

Appev deploys autonomous AI agents to stress-test your UX. They behave like real humans (confused, impatient, and chaotic) to find the bugs that scripted tests miss.